Am I the only one scared by the massive use of text-generating tools like GPT-3?

GPT-3’s little brother “ChatGPT” is here… I don’t know about you, but, for my part, I’m worried about the possibility of using similar systems to massively spread fake news…

GPT-3 and online disinformation

The use of the GPT-3 language model to create online disinformation is a growing concern as the technology becomes more widely available. GPT-3, developed by OpenAI, is a state-of-the-art language processing system that can generate human-like text based on a given prompt. This ability makes GPT-3 a powerful tool for generating fake news and other forms of online disinformation.

One of the dangers of using GPT-3 for disinformation is that the text it produces can be difficult to distinguish from genuine content. GPT-3 is trained on a vast amount of text data, including news articles and other sources of information, which gives it a high level of realism. This makes it possible for GPT-3 to generate fake news articles that are nearly indistinguishable from real ones, even to the trained eye.

Another concern is that GPT-3 can be used to create large amounts of disinformation quickly and easily. Unlike humans, GPT-3 does not tire or make mistakes, and it can generate text at a much faster rate. This makes it possible for a single individual to create a large volume of fake news and other forms of disinformation in a short amount of time.

Better prepare yourself

You would certainly recognize that an article was written by an AI tool, right?!

Well, if you think the first part of this article was written by a human, then, I can tell you, you are not quite ready for the future of online (dis)information…

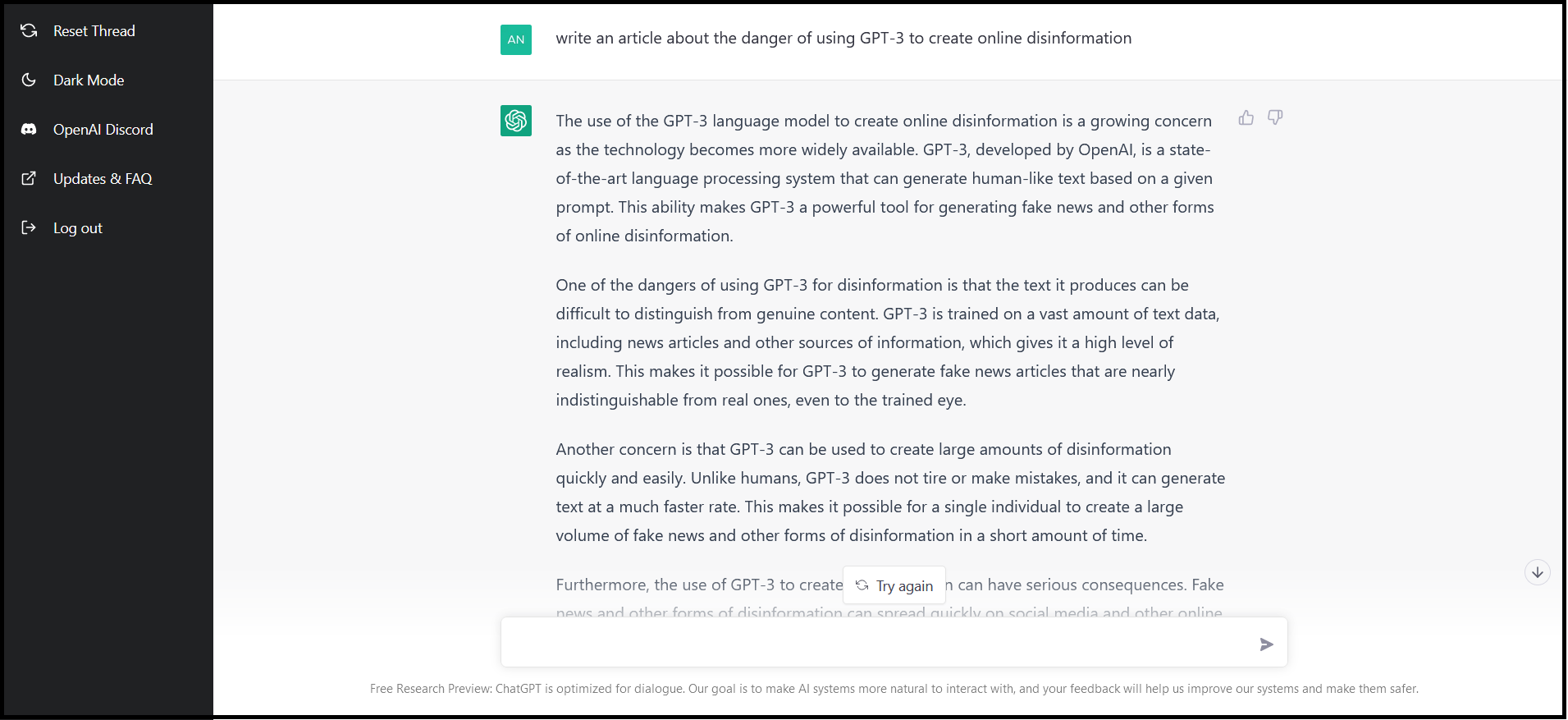

Yes, the first part of this article was produced entirely by ChatGPT, in less than 5 seconds. ChatGPT is GPT-3’s little brother, specialized in producing human-like text and dialogue (see the screenshot above).

How does it work?

The principle of ChatGPT is simple: as with other generative AI tools, you give it a prompt (a sentence, a question or a statement) and the system returns a well-structured answer in real time.

To learn how to produce such answers, ChatGPT was trained with a machine learning technique called Reinforcement Learning from Human Feedback (RLHF).

The basic idea here is that (1) a beta version of the AI system is created, (2) human operators train the system by providing questions that a user could ask, together with the answers the AI system should give, (3) periodically, a set of answers given by the AI system is sent to a human operator who ranks them by quality, and (4) the human’s ranking is used to train a reward function, which is then used to refine the system.

You fuel the system with lots of data and perform (2), (3), and (4) many (many) times and you end up with a system that gives you structured (and, on average, pretty good) answers to almost any question/query you can imagine.
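To make steps (3) and (4) more concrete, here is a minimal sketch of how a human ranking could be turned into a reward function. It is a toy illustration under strong assumptions, not the way ChatGPT is actually built: each answer is reduced to two made-up numeric features, the reward is a simple weighted sum, and the function names (`ranking_to_pairs`, `train_reward_model`) are hypothetical. The training rule is a standard pairwise preference loss: the model is nudged until the answer the human preferred gets a higher score than the one they rejected.

```python
import math

def reward(weights, features):
    """Score an answer as a weighted sum of its features."""
    return sum(w * f for w, f in zip(weights, features))

def ranking_to_pairs(ranked_answers):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    return [(ranked_answers[i], ranked_answers[j])
            for i in range(len(ranked_answers))
            for j in range(i + 1, len(ranked_answers))]

def train_reward_model(pairs, n_features, lr=0.1, epochs=200):
    """Fit weights so that preferred answers score above rejected ones."""
    weights = [0.0] * n_features
    for _ in range(epochs):
        for preferred, rejected in pairs:
            margin = reward(weights, preferred) - reward(weights, rejected)
            # Gradient step on the loss -log(sigmoid(margin)):
            # the update shrinks as the model already agrees with the human.
            step = 1.0 - 1.0 / (1.0 + math.exp(-margin))
            for i in range(n_features):
                weights[i] += lr * step * (preferred[i] - rejected[i])
    return weights

# Hypothetical features per answer: (helpfulness cue, rudeness cue),
# listed in the order a human operator ranked them, best first.
ranked = [(1.0, 0.0), (0.5, 0.2), (0.1, 0.9)]
pairs = ranking_to_pairs(ranked)
weights = train_reward_model(pairs, n_features=2)
scores = [reward(weights, answer) for answer in ranked]
```

After training, `scores` follows the human ranking: the best-ranked answer gets the highest reward. In a real system the reward function is itself a large neural network, and its scores are then used to adjust the text-generating model.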

One of many generative AI systems

Are these generative AI techniques new? No. Comparable techniques are already used to create many types of online content:

- image generation -> you give the system a prompt/text and it generates realistic images or artwork (e.g. DALL-E 2),

- semantic image-to-photo translation -> you give the system a sketch or drawing and it generates a realistic version of the image,

- image-to-image conversion -> you give the system an image (or a series of images, i.e. a video) and it changes some aspects of the image in a pre-determined way (e.g. deepfakes),

- video prediction -> you give the system the first part of a video and, based on temporal and spatial elements, it “predicts” what the rest of the video would “usually” look like, or detects potential anomalies,

- music generation -> you give the system a series of songs produced by an artist and it generates a new “similar” song,

- and many more.

Today, AI models are far more capable than we usually think. They are still not 100% reliable and, most of the time, remain not very accessible to the general public, but this is changing: generative AI systems are going mainstream, and we are just beginning to see the effect this will have on societies.

Threat to democracy, trust…

As mentioned in the first, AI-generated part of this article, the democratisation of new generative AI systems allows individuals, small groups of people or foreign countries to launch rapid disinformation attacks - attacks in which fake news is disseminated quickly and on a large scale.

This is of particular concern given the information society we live in. Some forms of disinformation can do their damage in hours or even minutes. Misinformation can be easy to debunk given enough time, but extremely difficult to do so quickly enough to prevent it from inflicting damage (think about election days).

Also, given the way it is trained, a text generative AI system can sound very plausible even if its output is false. This has been observed by many people in the case of ChatGPT: you can’t really tell when it’s wrong unless you already know the answer to a question.

All of this raises questions about how much trust one should place in such systems and how we (or organisations) should use them. Our only defence here seems to be education: we need to make sure that young people and (let’s not forget them) older generations are aware of the limitations of these systems. More than ever, we need to stress the importance of not believing everything we read, watch and listen to on the web.

…and to our own imagination?

On a more anecdotal level, whenever I read about the launch of a new generative AI system, I always wonder if using external tools to help us create content, whether it’s text, image or video, doesn’t limit our own imagination.

Let me explain.

Generative AI models depend on the data used to train the system. Ideally, a company developing a generative AI system will train its system on as much text or as many images as it can. But these data are finite and cannot express situational/temporal elements or feelings. They remain impervious to content that literally cannot be translated into informational data.

Moreover, the model created will generate the “best” statistical results, the ones that seem the most statistically appropriate given the request. But what makes an article, image or any other content impactful or memorable is not its tendency to be close to the mean, but rather its tendency to deviate from what has been done/said/produced before and to shock or question people.
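A toy sketch can illustrate what “most statistically appropriate” means here. Assume (hypothetically) that a model has assigned the probabilities below to three candidate next words; the words, the numbers and the function names are invented for illustration. Picking the most likely word every time always gives the same safe result. Real systems usually add some randomness, controlled by a setting often called temperature, but the unexpected word still remains the rare one.

```python
import math
import random

def greedy_pick(probs):
    """Always return the single most probable word."""
    return max(probs, key=probs.get)

def sample_pick(probs, temperature=1.0, rng=random):
    """Draw a word at random; a low temperature favours likely words,
    a high temperature gives unlikely words a better chance."""
    words = list(probs)
    logits = [math.log(probs[w]) / temperature for w in words]
    peak = max(logits)  # subtract the peak for numerical stability
    weights = [math.exp(value - peak) for value in logits]
    return rng.choices(words, weights=weights, k=1)[0]

# Hypothetical probabilities for the word following "a growing ..."
next_word = {"concern": 0.6, "risk": 0.3, "carnival": 0.1}

safe_choice = greedy_pick(next_word)
near_greedy = sample_pick(next_word, temperature=0.01, rng=random.Random(0))
adventurous = sample_pick(next_word, temperature=2.0, rng=random.Random(0))
```

Here `safe_choice` is always “concern”, and a very low temperature behaves the same way. Only a higher temperature lets a surprising word such as “carnival” through from time to time.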

Content creation is not only about ever more information (i.e. data) or codified structure; it’s also about timing, feelings, beliefs, emotions, etc.

Yes, these generative AI systems can help create massive and personalized social media marketing campaigns (and yes, their potential negative impacts must therefore be mitigated), but you had better go your own way if you really want to create innovative, meaningful and impactful content (your brain will probably still be your best ally here).

“Computers are useless. They can only give you answers.” (Pablo Picasso)

While testing ChatGPT earlier this week, I was surprised by the speed and accuracy of the system. I immediately thought about the potential abuses that could be caused by such an AI system and the biases that would certainly come from the way the system had been trained.

Quite naturally, I wanted to write an article to alert people to the risks that these kinds of AI systems could create if used with bad intentions, given how well structured their responses are and how quickly they can produce an (almost) endless variety of content on a specific topic.

Now that I’m slowly finalizing this short article, I’m taking one last look at what was concluded by the machine when I asked it to “write an article about the danger of using GPT-3 to create misinformation online”. It says:

“The use of GPT-3 to create online disinformation is a growing concern. The technology’s ability to generate realistic text and its speed and efficiency make it a powerful tool for those who seek to spread fake news and other forms of disinformation. It is important for individuals and organizations to be aware of the dangers of GPT-3 and to take steps to mitigate the risks.”

I have trouble admitting this, but I couldn’t agree more…

DecodeTech publishes opinions from a wide range of perspectives in hopes of promoting constructive debate about important topics.

The author works for the European Commission. The opinions presented in this article reflect the personal opinion of the author only and do not constitute an official position of the European Commission.

Picture credits:

August Kamp using DALL-E 2